CS 180 Project 1: Colorizing the Prokudin-Gorskii Collection

Written by Aaron Zheng

Overview

The goal of this project is to align three images, representing the blue, green, and red color channels, into a single color image that looks as clean as possible to the viewer.

This project is meaningful because it addresses an important historical problem: regular cameras could not take color pictures, only black-and-white ones. About 100 years ago, a Russian color photography pioneer named Sergei Mikhailovich Prokudin-Gorskii worked around this by taking 3 black-and-white images of each scene through red, green, and blue filters respectively. He hoped to combine these images using projectors fitted with different color filters, but the limited technology of his time did not allow him to find a way to align them. Now, with my Computer Vision knowledge, I am able to assist Sergei by reconstructing his images through this project.

First Attempts

In my first attempt, I produced a very misaligned image, because I simply read the red, green, and blue intensities from the provided image and combined them directly.
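A minimal sketch of this naive first attempt is shown below. It assumes the scanned plate stores the three exposures stacked vertically in blue, green, red order (top to bottom) and that the image has already been loaded as a 2D NumPy array; the function name `split_and_stack` is hypothetical and not taken from my actual code.

```python
import numpy as np

def split_and_stack(plate):
    """Cut a vertically stacked B/G/R plate into thirds and stack them as RGB.

    No alignment is applied, so the result shows colored fringes.
    """
    h = plate.shape[0] // 3           # height of one channel (assumed equal thirds)
    b = plate[:h]                     # top third: blue exposure
    g = plate[h:2 * h]                # middle third: green exposure
    r = plate[2 * h:3 * h]            # bottom third: red exposure
    return np.dstack([r, g, b])       # shape (h, w, 3)

# Tiny synthetic "plate" just to show the shapes involved.
plate = np.arange(9 * 4, dtype=float).reshape(9, 4) / 35.0
rgb = split_and_stack(plate)
```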

Unaligned Image

After that, I realized that the fundamental problem was solving the misalignment, and that I should start with a simple single-scale search. This led me to write a simple single-scale alignment function, which managed to align the smaller images.

I started by writing the metric (evaluation) functions, L2 and NCC, which score the similarity of two images as one of them is shifted across a search window. The major bugs I fixed were forgetting to crop the borders (which led to very problematic results for monastery.jpg) and confusing whether to maximize or minimize the L2 and NCC scores: L2 is a distance, so it should be minimized, while NCC is a correlation, so it should be maximized.
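The two metrics can be sketched as follows. This is an illustrative version, not my exact code: the helper names and the 10% crop fraction are assumptions, and the images are assumed to be 2D float arrays of the same shape.

```python
import numpy as np

def crop_border(img, frac=0.1):
    """Drop a margin on every side so plate borders do not dominate the score."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]

def l2_score(a, b):
    """Sum of squared differences. Lower means more similar (minimize)."""
    return float(np.sum((a - b) ** 2))

def ncc_score(a, b):
    """Normalized cross-correlation. Higher means more similar (maximize)."""
    a0 = a - a.mean()
    b0 = b - b.mean()
    denom = np.linalg.norm(a0) * np.linalg.norm(b0)
    # A constant image has zero norm; return 0.0 instead of dividing by zero.
    return float(np.sum(a0 * b0) / denom) if denom > 0 else 0.0
```

Because the two scores point in opposite directions, one simple way to avoid the maximize/minimize confusion is to negate NCC so that every metric is minimized.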

Next, I tried the naive method on the "melons.tif" image. With a modest search window of [-15, 15] in both the height and width dimensions, the code did not finish running even after 10 minutes, which made me realize that this was not a good approach.
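For reference, the naive single-scale search looks roughly like the sketch below. It assumes an L2 metric, circular shifts via `np.roll`, a 10% border crop, and a (row, column) shift convention; the function name `align_single_scale` is hypothetical. A window of [-15, 15] means 31 x 31 = 961 candidate shifts, and each one scores the whole image, which is why this becomes so slow on a roughly 3000x3000 scan.

```python
import numpy as np

def align_single_scale(moving, reference, radius=15, crop=0.1):
    """Exhaustively try every (dy, dx) in [-radius, radius]^2 and keep the best.

    Returns the (dy, dx) shift to apply to `moving` so it matches `reference`.
    """
    h, w = reference.shape
    dh, dw = int(h * crop), int(w * crop)
    ref = reference[dh:h - dh, dw:w - dw]          # score interior pixels only
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = np.sum((shifted[dh:h - dh, dw:w - dw] - ref) ** 2)
            if score < best_score:                 # L2: lower is better
                best_score, best_shift = score, (dy, dx)
    return best_shift
```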

Intermediate Steps

I realized that the running time was so slow because of how big the "melons.tif" image is: roughly 3000x3000, much bigger than the smaller images, which are only around 300x300.

Hence, I began to build my image pyramid approach. I created 2 arrays of images, one for each of the two images being aligned, where the first item in each array is the original image and each following image is the previous image scaled down by a factor of 2 along both the height and width axes. The last image in each array has a height of at most 80 pixels.

Then, starting from the coarsest scale (i.e., the last image in each of the two arrays), I run the single-scale approach to find the pixel shift of the first image that gives the best alignment under the chosen metric. Once this is done, I repeat the procedure at the second-coarsest scale, only this time I pre-shift the image by the shift found at the coarsest scale, multiplied by 2.

I then calculate the shift again, add it to the pre-shift, and multiply the sum by 2 to get the new pre-shift for the next pair of images. Repeating this down to the original resolution eventually gives the desired shift of the first image, as well as the final aligned color image.
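The coarse-to-fine procedure described above can be sketched as follows. This is a simplified illustration rather than my exact implementation: downscaling by 2x2 block averaging, the L2 metric, circular shifts with `np.roll`, and the names `downscale2`, `search`, and `pyramid_align` are all assumptions, as is the choice of a radius of 15 at the coarsest level. Note that the shift is doubled only when moving to a finer level, never after the final (full-resolution) level.

```python
import numpy as np

def downscale2(img):
    """Halve height and width by averaging 2x2 blocks (assumed resampling)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def search(moving, reference, radius, crop=0.1):
    """Single-scale exhaustive L2 search over [-radius, radius]^2."""
    h, w = reference.shape
    dh, dw = int(h * crop), int(w * crop)
    ref = reference[dh:h - dh, dw:w - dw]
    best, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            cand = np.roll(moving, (dy, dx), axis=(0, 1))[dh:h - dh, dw:w - dw]
            score = np.sum((cand - ref) ** 2)
            if score < best_score:
                best_score, best = score, (dy, dx)
    return best

def pyramid_align(moving, reference, min_height=80, coarse_radius=15, fine_radius=4):
    """Return the full-resolution (dy, dx) shift aligning `moving` to `reference`."""
    movs, refs = [moving], [reference]
    while movs[-1].shape[0] > min_height:          # stop once height <= min_height
        movs.append(downscale2(movs[-1]))
        refs.append(downscale2(refs[-1]))
    dy, dx = 0, 0
    last = len(movs) - 1
    for level in range(last, -1, -1):              # coarsest level first
        radius = coarse_radius if level == last else fine_radius
        pre = np.roll(movs[level], (dy, dx), axis=(0, 1))   # apply pre-shift
        ddy, ddx = search(pre, refs[level], radius)
        dy, dx = dy + ddy, dx + ddx
        if level > 0:                              # do not double the final shift
            dy, dx = dy * 2, dx * 2
    return dy, dx
```

With this structure, each finer level only needs a small refinement window, so the expensive full-resolution image is scored for a handful of candidate shifts instead of 961.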

Major bugs I fixed, and changes I made, here were:

- Accidentally multiplying the final (correct) shift for the first image by 2, which led to a very misaligned image.

- Changing the reference channel: instead of aligning the red and green images to the blue image, I now align the red and blue images to the green image.

- For the `train` image, I had to increase the `search_window` from 3 to 4 in order to align the image properly.

The red and blue images are the least aligned with each other, and the green image seems to sit between them. By aligning relative to the green image instead of the blue image, I was able to obtain much better results (especially for the "emir.tif" image).
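As an illustration of using green as the reference, the sketch below applies already-computed shifts to the red and blue channels and leaves green untouched. The function name `compose_green_reference`, the (row, column) shift convention, and the use of circular shifts via `np.roll` are assumptions made for this example.

```python
import numpy as np

def compose_green_reference(r, g, b, red_shift, blue_shift):
    """Shift red and blue onto the green channel and stack the result as RGB."""
    r_aligned = np.roll(r, red_shift, axis=(0, 1))    # red moved onto green
    b_aligned = np.roll(b, blue_shift, axis=(0, 1))   # blue moved onto green
    return np.dstack([r_aligned, g, b_aligned])       # green is left untouched
```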

Bells And Whistles

For this section, I implemented an automatic contrast scaling function, which rescales the blue, green, and red images so that the minimum intensity is always 0.0 and the maximum intensity is always 1.0. I did this by subtracting the minimum intensity from each of the blue, green, and red images, and then scaling each image so that its maximum intensity equals 1.0.

In principle, this increases the contrast of the images, making them brighter and their details more apparent. However, the effect is not very striking or noticeable in this case.
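A minimal sketch of this rescaling for one channel is given below. The function name `auto_contrast` is hypothetical, and the handling of a constant image (returning all zeros) is an assumption added to avoid division by zero.

```python
import numpy as np

def auto_contrast(channel):
    """Linearly stretch a channel so its minimum maps to 0.0 and maximum to 1.0."""
    lo, hi = channel.min(), channel.max()
    if hi == lo:                                   # constant image: nothing to stretch
        return np.zeros_like(channel, dtype=float)
    return (channel - lo) / (hi - lo)
```

In use, this would be applied to each of the blue, green, and red channels separately before stacking them into the color image.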

Here are some of the sample images. The left is with the automatic contrast scaling, and the right is without.

With Contrasting

Without Contrasting

As can be seen, the effect of the contrast scaling is barely visible. This suggests that most of the images in data.zip are well calibrated from the start.

All Results

Here are my results:

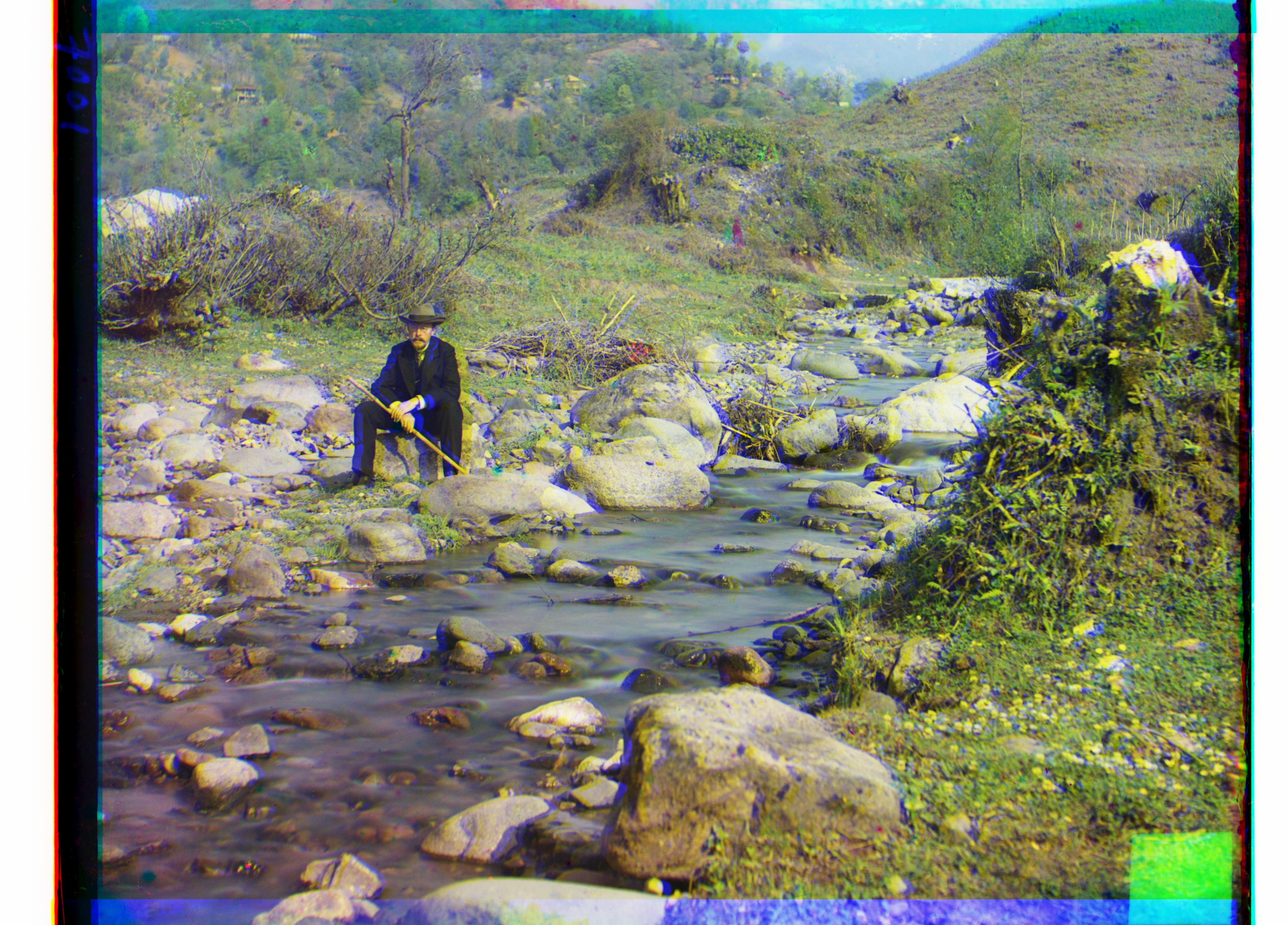

Harvesters

L2

(Relative to Green) Blue= (-58, -11) Red = (65, -3)

NCC

(Relative to Green) Blue= (-58, -11) Red = (65, -3)

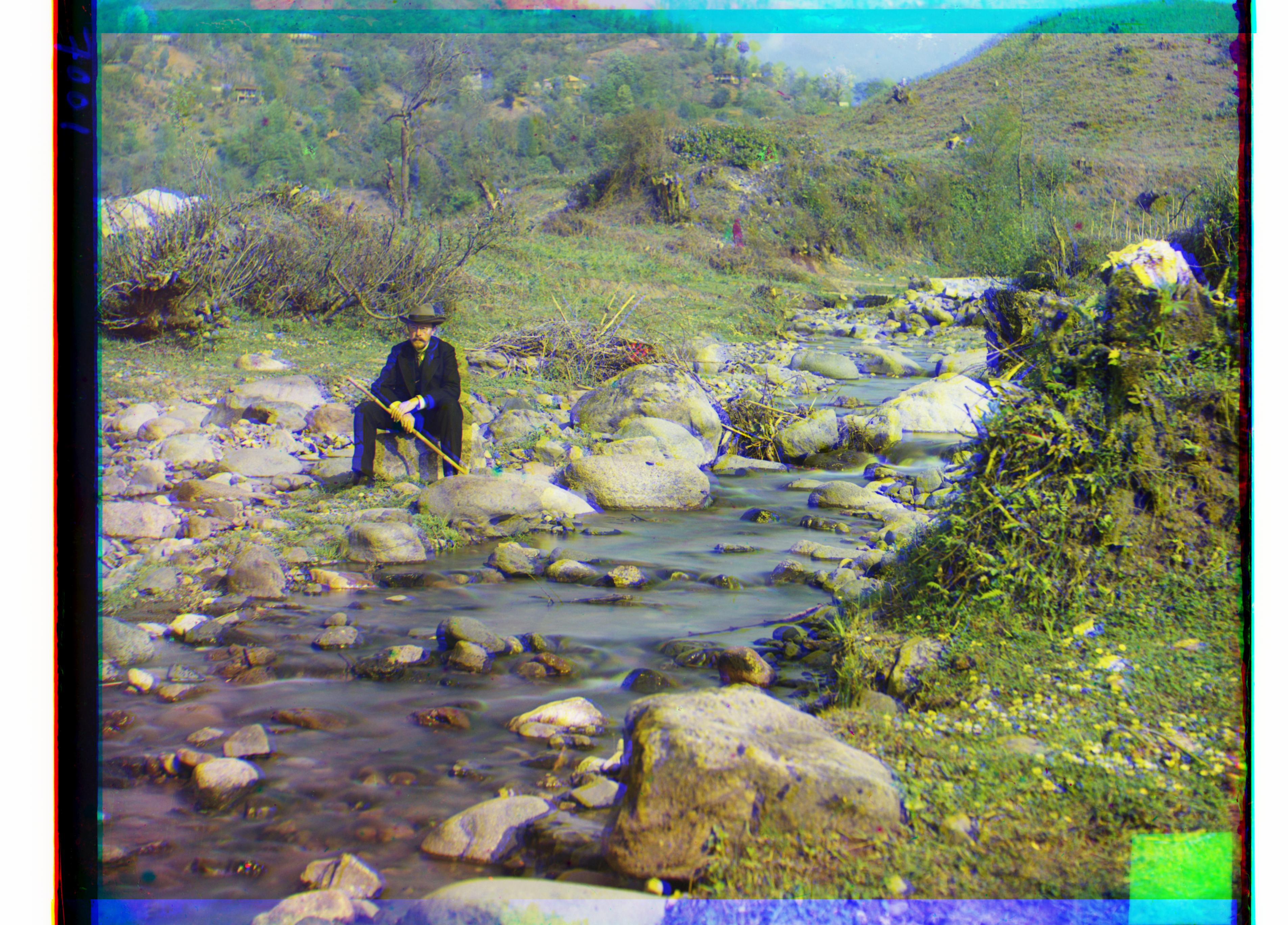

L2

(Relative to Green) Blue= (-40, -16) Red = (48, 5)

NCC

(Relative to Green) Blue= (-40, -16) Red = (48, 5)

L2

(Relative to Green) Blue= (-44, 6) Red = (64, -9)

NCC

(Relative to Green) Blue= (-44, 6) Red = (64, -9)

L2

(Relative to Green) Blue= (-81, -4) Red = (96, 3)

NCC

(Relative to Green) Blue= (-81, -4) Red = (96, 3)

L2

(Relative to Blue) Green = (-3, 1) Red = (3, 2)

NCC

(Relative to Blue) Green = (-3, 1) Red = (3, 2)

L2

(Relative to Green) Blue= (-52, -23) Red = (57, 10)

NCC

(Relative to Green) Blue= (-52, -23) Red = (57, 10)

L2

(Relative to Green) Blue= (-33, 11) Red = (107, -16)

NCC

(Relative to Green) Blue= (-33, 11) Red = (107, -16)

L2

(Relative to Green) Blue= (-76, 1) Red = (98, 7)

NCC

(Relative to Green) Blue= (-76, 1) Red = (98, 7)

L2

(Relative to Green) Blue= (-52, -6) Red = (58, 0)

NCC

(Relative to Green) Blue= (-52, -6) Red = (58, 0)

L2

(Relative to Blue) Green = (3, 2) Red = (6, 3)

NCC

(Relative to Blue) Green = (3, 2) Red = (6, 3)

L2

(Relative to Green) Blue= (-41, 2) Red = (43, 25)

NCC

(Relative to Green) Blue= (-41, 2) Red = (43, 25)

L2

(Relative to Blue) Green= (6, -1) Red = (12, -1)

NCC

(Relative to Blue) Green= (6, -1) Red = (12, -1)

L2

(Relative to Green) Blue= (-24, 3) Red = (33, -6)

NCC

(Relative to Green) Blue= (-24, 3) Red = (33, -6)

L2

(Relative to Green) Blue= (-45, -9) Red = (56, 13)

NCC

(Relative to Green) Blue= (-45, -9) Red = (56, 13)